A TODO app in 3 lines of Python & GPT-3

Yup, another generative AI post!

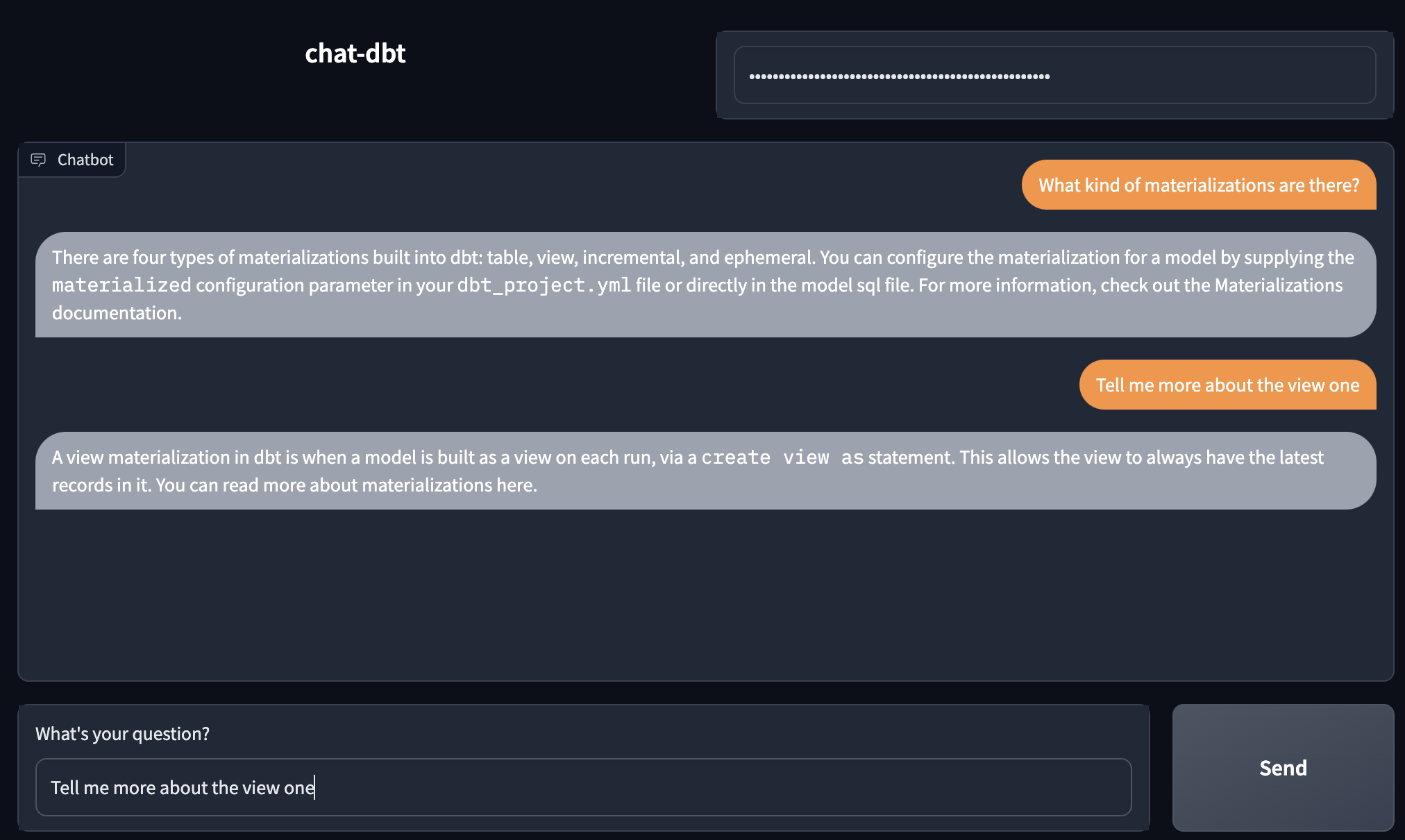

Lately, I've been having a lot of fun playing around with generative AI tools, mainly the GPT-3 LLM through OpenAIs API. One example is the chat-dbt application, which exposes a ChatGPT-like interface that allows the user to get information about dbt, by ingesting all of its documentation pages into a vector store and combining similarity search with GPT-3 it can answer any question (with a lot of confidence!)

This uses the incredibly powerful Langchain library to wrap both the vector store and the OpenAI API.

Langchain is a Python library that enables developers to easily implement applications around LLMs by abstracting away most of the gritty parts and providing a clean interface. If you are interested in LLM-based application development, I urge you to give Langchain a try!

The idea

Langchain also exposes a component called SQLDatabaseSequentialChain, which enables an LLM to answer questions of a relational database. Basically, the LLM will be able to translate the incoming questions/commands to valid SQL statements, run them and return the result.

Chains go beyond just a single LLM call, and are sequences of calls (whether to an LLM or a different utility). LangChain provides a standard interface for chains, lots of integrations with other tools, and end-to-end chains for common applications.

Obviously, the next step has to be to do a little prompt engineering and wrap an API endpoint around our code and voilà, we have a fully functioning todo app, ready for production! Alright, maybe not that last part.

Let's see how it actually looks.

The code

As promised, 3 lines of code:

First, we grab our prompt template

p = PromptTemplate(input_variables=["input", "table_info", "dialect"], template=open("command.template").read())Second, we run the chain using GPT-3 from OpenAI over our database

chain = SQLDatabaseChain(llm=OpenAI(temperature=0), database=SQLDatabase.from_uri("sqlite:///todos.db"), prompt=p)And finally, parse and return the results!

json.loads(chain.run(command.text).split("JSON: ")[1])There are a few other lines of boilerplate, but I needed the catchy title 👹, see the repo for the full codebase at the end of the article.

The prompt template that GPT-3 received looks like this:

You have to act as a backend server for a web application that allows users to manage TODO lists.

You receive requests and based on the type and content of the request, you have to update the database state and return

a response.

Given the request, first create a syntactically correct {dialect} query to run, then look at the results of the query and return the answer.

Use the following format:

Question: "Question here"

SQLQuery: "SQL Query to run"

SQLResult: "Result of the SQLQuery"

Answer: "Final answer here"

JSON: "JSON response here, list of objects"

Only use the following tables:

{table_info}

If the request is about needing to do something, add the task to the database as a new TODO item.

Question: {input}This instructs the model on how to interpret the incoming request, run the SQL query, and how to return the results.

The sequence of actions behind the scene is handled by langchain, so we don't have to do anything ourselves!

Does this really work?

Alright, let's test it out! We can start our FastAPI server by running python main.py & it's ready to accept requests!

Let's get all existing todos in the database

curl -X POST -H "Content-Type: application/json" -d '{"command": "Get all todos"}' http://localhost:8000/todo | jq[

{

"id": 4,

"name": "Buy cheese",

"done": 0

},

...

{

"id": 13,

"name": "buy bread",

"done": 0

}

]Nice! We can also verify that these are the actual records in the database by peeking into the SQLite file.

A very interesting feature of using an LLM as the backend for an API is that we can use various commands to achieve the same results. For example, all of the following instructions would make the model return all the existing items.

- Give all todos

- Give all

- Show me every item

- What items are in the db?

And the list goes on...

Let's try adding a new item.

curl -X POST -H "Content-Type: application/json" -d '{"command": "I need to buy bread"}' http://localhost:8000/todo | jq{

"message": "The task has been added to your TODO list."

}Great, with some more prompt engineering we could get the response to hold the actual new item!

What if we want to mark an item as complete?

curl -X POST -H "Content-Type: application/json" -d '{"command": "Mark buy bread item as done"}' http://localhost:8000/todo | jq{

"message": "The item 'buy bread' has been marked as done."

}If we enable verbose output in the chain, we get to see how GPT-3 manages the input to get the desired output.

for example for this request

curl -X POST -H "Content-Type: application/json" -d '{"command": "Get all"}' http://localhost:8000/todo | jqin the logs, we can see the following output

> Entering new SQLDatabaseChain chain...

Get all

SQLQuery: SELECT * FROM todos

SQLResult: [(1, 'Buy milk', 0), (2, 'Buy eggs', 0), (3, 'Buy bread', 0), (4, 'Buy cheese', 0), (5, 'Buy butter', 0), (6, 'learning_kafka', 0), (7, 'learning_kafka', 0), (9, 'buy milk', 0), (10, 'buy bread', 1)]

Answer: 14 TODO items found

JSON: [{"id": 1, "name": "Buy milk", "done": 0}, {"id": 2, "name": "Buy eggs", "done": 0}, {"id": 3, "name": "Buy bread", "done": 0}, {"id": 4, "name": "Buy cheese", "done": 0}, {"id": 5, "name": "Buy butter", "done": 0}, {"id": 6, "name": "learning_kafka", "done": 0}, {"id": 7, "name": "learning_kafka", "done": 0}, {"id": 9, "name": "buy milk", "done": 0}, {"id": 10, "name": "buy bread", "done": 1}]

> Finished chain.

As we can see, the query looks correct and the results match what we expect.

If we tell the API Buy Eggs task is done

The output looks like this:

> Entering new SQLDatabaseChain chain...

Buy Eggs task is done

SQLQuery: UPDATE todos SET done = 1 WHERE name = 'Buy Eggs';

SQLResult:

Answer: The task 'Buy Eggs' has been marked as done.

JSON: { "message": "The task 'Buy Eggs' has been marked as done." }

> Finished chain.Exactly as expected.

The future is now, old person!

While not completely sophisticated yet, LLMs are an increasingly powerful tool for everyone, including developers. New use cases are popping up every day and with improved models, who knows what else we can unlock.

Check out the full code with the prompt in this repository.

Member discussion